In this guide, we explore the top features of OpenArt AI in 2025: how they have evolved, how they empower creators, and how you can use them for visual storytelling, branding, marketing, and creative workflows. We write as a team of practitioners (hence “we”), so you can see how we would apply these features in real-world creative pipelines and strategic content planning.

Table of Contents

- Introduction: Why OpenArt AI Matters in 2025

- The OpenArt Platform Ecosystem — At a Glance

- Feature Deep Dives

  - Model Library & Multi-Model Support

  - Character Creation & Consistency

  - Image-to-Video & Video Tools

  - Inpainting, Background Removal & Object Editing

  - Upscaling & Resolution Enhancement

  - Training Your Own Style / Custom Models

  - Prompt Flexibility & Optional Prompt Workflows

  - Creative Variations, Remixing & Iterations

  - Team Collaboration & Business Features

  - Commercial Licensing, Rights & Use Cases

- Use Cases: How Top Features Enable Creative Workflows

- Best Practices & Pro Tips

- Limitations, Challenges & What to Watch

- Conclusion: What the Top Features Mean for You in 2025

- Suggested Mermaid Diagram

- References & Suggested Visuals

1. Introduction: Why OpenArt AI Matters in 2025

In 2025, OpenArt AI and other generative AI platforms for visual creation are no longer experimental — they’ve become foundational for marketing, content creation, branding, advertising, entertainment, and artist workflows. As more users and creative teams adopt tools like OpenArt AI, differentiation now comes down to features, flexibility, consistency, and ease of use.

OpenArt AI has evolved into a full creative ecosystem, not just a simple “text-to-image” generator. It now supports multiple modalities (image, video, editing), character consistency, custom model training, prompt-optional workflows, and more. Its rapid growth and adoption signal that its feature set is resonating with creators and brands. (Sacra)

Thus, to stay ahead in 2025, knowing which features matter and how to use them is critical. This guide reveals the top features of OpenArt AI you should know in 2025, with tactical insights for power users.

2. The OpenArt Platform Ecosystem — At a Glance

Before we dive into individual features, it helps to see how OpenArt’s modules fit together. The ecosystem is built around several core modules that work together to enhance creativity and productivity:

- Creation / Generate Hub: The core OpenArt AI workspace for text-to-image or image-to-image generation.

- Editing Suite: Tools like inpainting, object removal, canvas expansion, and background replacement help refine your OpenArt AI creations.

- Character / Persona Module: Maintain consistent characters across outputs with OpenArt AI’s advanced identity control tools.

- Video Module: Transform still images into motion using OpenArt AI features such as lip sync, cinematic transitions, and scene animation.

- Model Training / Custom Style: Train your own style or model directly inside OpenArt AI to develop a unique visual signature.

- Variation / Remix Module: Explore alternate versions and creative directions effortlessly within OpenArt AI.

In effect, OpenArt AI is not just a “generator” but an integrated creative platform.

3. Feature Deep Dives

Below we examine each top feature, its capabilities, best uses, and pro tips.

3.1 Model Library & Multi-Model Support

One of OpenArt’s core strengths is its expansive library of AI models and the flexibility to pick or switch between them.

Capabilities & Highlights

- Over 100 distinct models available, spanning photorealistic, fantasy, anime, abstract, and niche styles. (mimicpc.com)

- Integration of new models such as Nano Banana, FLUX Kontext, Kling 2.1, Veo 3, WAN 2.2, Ideogram V3, etc., offering specialized performance improvements. (OpenArt)

- Ability to select model per generation, mix model types, or chain models (for example, initial sketch with one model, polish with another).

- Optional “model blending” or weighting features to merge style traits from two or more models.

Best Use Cases

- For concept art: use stylized or fantasy models

- For marketing/promotional content: photorealistic or hyperrealism

- For stylized brand identity: mix specific models to maintain visual consistency

- For experimentation: quickly switch between models to compare outputs

Pro Tips

- Test lightweight models first to preview results faster before deploying heavy models.

- Save your model selections (or favorite lists) for reuse in later prompts.

- Use model chaining: e.g. generate rough shapes with a fast model, then refine with a high-fidelity model.

- Document model-to-use-case mapping (e.g. Model A for characters, Model B for architecture) to streamline your workflow.
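One lightweight way to document a model-to-use-case mapping is a small lookup table kept alongside your project files. The sketch below is illustrative only: the use-case keys, the `pick_model` helper, and the "Model A" / "Model B" placeholders are hypothetical and do not correspond to any OpenArt API.

```python
# Hypothetical lookup table; replace the placeholders with models your team has tested.
MODEL_FOR_USE_CASE = {
    "characters": "Model A",
    "architecture": "Model B",
}
DEFAULT_MODEL = "Model A"  # assumed fallback when a use case is not documented yet


def pick_model(use_case: str) -> str:
    """Return the documented model for a use case, or the default if none is listed."""
    return MODEL_FOR_USE_CASE.get(use_case.strip().lower(), DEFAULT_MODEL)
```

Keeping the table in version control gives the team one place to update when a new model replaces an older favorite.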

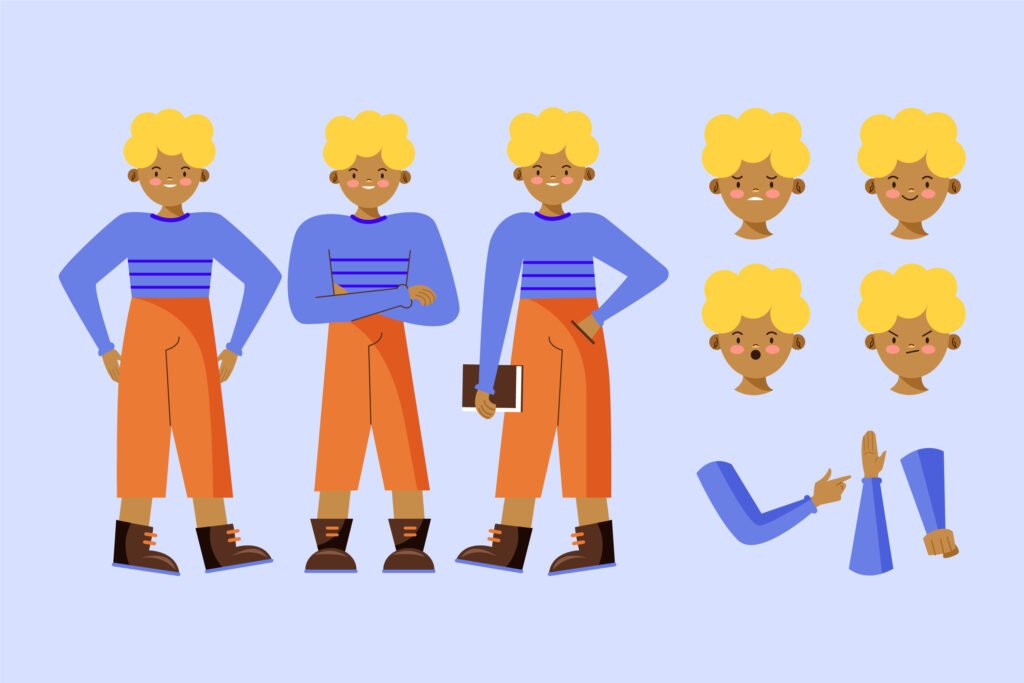

3.2 Character Creation & Consistency

One of OpenArt’s standout features is its ability to create and manage consistent characters across multiple outputs. This is an area where many AI art tools still struggle.

Core Abilities

- Create a character from a text description, a single reference image, or a set of 4+ images. (mimicpc.com)

- Train a “character model” in roughly 10 minutes (depending on plan) to embed the character in future generations. (mimicpc.com)

- Tools to lock the character identity so pose, style, clothing, etc. remain consistent even if the scene or background changes. (mimicpc.com)

- Pose Editor / OpenPose integration to articulate skeletons and control body posture. (mimicpc.com)

- Scene compositing: place your character in new environments or backgrounds, with background matching or lighting adjustments. (mimicpc.com)

- Support for multiple characters in a single frame, each with identity maintenance. (OpenArt)

Use Cases

- Visual novels, comics, or illustrated stories created with OpenArt AI benefit from strong character coherence and identity control.

- Branding mascots, avatar systems, or signature characters built using OpenArt AI maintain consistent visuals across all marketing campaigns.

- OpenArt AI makes it easier to design product ad mockups with consistent characters — for example, a model wearing different clothing variations.

- Animation drafts or storyboards developed in OpenArt AI ensure that the central character remains recognizable throughout all frames.

Pitfalls & Pro Tips

- In OpenArt AI, character consistency is often best achieved when starting with multiple reference images (from varied angles) rather than just one.

- The OpenArt AI Pose Editor is powerful but can sometimes be imprecise; manual prompt adjustments may be needed for best results.

- Lighting and color consistency in OpenArt AI projects matter greatly — ensure your reference images have similar lighting to target scenes so embedding and blending work more naturally.

- When composing backgrounds, use mask / blending hints to avoid artifacts where the character intersects with objects.

3.3 Image-to-Video & Video Tools

In 2025, motion is becoming essential. OpenArt supports video generation, image transitions, lip sync, and cinematic flows.

Key Features & Updates

- In OpenArt AI, models like Kling 2.1, WAN 2.2, and Veo 3 enable higher-quality video generation, smoother motion, better prompt alignment, and cinematic camera behavior.

- OpenArt AI provides Start & End Frame Control to define a start and end frame for seamless transitions or morph effects.

- With OpenArt AI, creators can use frame interpolation up to 120 FPS and upscale video resolution to 8K (7680×4320) for professional-grade clarity.

- The OpenArt AI Lip Sync feature lets you generate speech synchronized to character mouth movements using audio prompts or uploaded sound.

- Using OpenArt AI’s Grab Frame to Video tool, you can animate parts of still images—like drifting clouds or rippling water—by selecting specific frame regions.

- One-Click Story: supply a script, beat, or storyboard, and the tool assembles a 1-minute video complete with transitions, music, and narrative structure. (OpenArt)

- Automatic upsampling for video (e.g. Topaz Labs integration) to preserve clarity at high resolution. (OpenArt)
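To get a feel for what the 120 FPS and 8K figures above imply, a little arithmetic helps. The sketch below is plain Python with hypothetical helper names. It only counts frames and pixels and says nothing about how OpenArt actually prices or processes video.

```python
def frame_count(seconds: float, fps: int) -> int:
    """Number of frames in a clip of the given length."""
    return round(seconds * fps)


def pixels_per_frame(width: int, height: int) -> int:
    """Total pixels in one frame."""
    return width * height


# A 10-second clip interpolated to 120 FPS.
frames = frame_count(10, 120)

# One 8K frame compared with one 1080p frame.
pixels_8k = pixels_per_frame(7680, 4320)
pixels_1080p = pixels_per_frame(1920, 1080)
ratio = pixels_8k / pixels_1080p
```

Here `frames` is 1200, `pixels_8k` is 33,177,600, and `ratio` is 16.0, so a single 8K frame carries sixteen times the pixels of a 1080p frame. That is one reason to test with short clips before committing to a full-resolution export.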

Applications

- Create social media reels and promotional short videos effortlessly using OpenArt AI’s video generation tools, which ensure smooth transitions and cinematic effects.

- Design animated intros, outros, and brand video snippets within OpenArt AI to enhance visual storytelling and brand identity.

- Produce cinematic mood pieces derived from still artwork using OpenArt AI’s motion and scene animation features, giving static visuals life and depth.

Best Practices & Tips

- When using OpenArt AI, start with short clips (5–10 seconds) to test transitions and motion dynamics effectively.

- In OpenArt AI’s video module, use motion hints in prompts (e.g. “camera pushes in slowly”, “fade into dark”) to enhance cinematic flow and storytelling.

- Combine OpenArt AI’s video generation with its character consistency tools to animate your previously trained characters seamlessly for brand or story continuity.

- Experiment with frame blending methods to reduce jitter in morph transitions.

- When generating lip sync, use clear audio voice tracks (less noise) and shorter utterances to minimize misalignment.

3.4 Inpainting, Background Removal & Object Editing

A powerful editing suite complements generation. OpenArt prioritizes high-fidelity editing tools to refine and fix outputs.

Core Editing Tools

- Inpainting / Local Editing: select any region to replace or regenerate, while preserving surrounding context. (OpenArt)

- Background Removal / Change / Replacement: isolate foreground subject and swap background. (OpenArt)

- Object Removal / Find & Replace: erase unwanted elements, or replace them with new ones. (OpenArt)

- Image Expand / Canvas Extension: extend scenes beyond original borders, with synthesized completion. (OpenArt)

- Facial Expression & Feature Controls: adjust expressions, gaze, facial features on characters. (OpenArt)

- Color Palette Control / Style Transfer: remap image to palette constraints or re-style existing images. (SaaSworthy)

Use Cases & Benefits

- Fixing artifacts or undesired elements from generated output

- Iterative refinement: generate a base image, then edit fine details

- Compositing characters into real-world photography

- Recoloring or thematic re-styling without full regeneration

Pro Tips

- Use masks / precise selection brushes to isolate areas to edit and avoid bleeding artifacts.

- When extending canvas, supply “hint imagery” or cues for backgrounds to maintain coherence.

- For background replacement, match the lighting direction, color tone, and perspective to avoid mismatch.

- Use the edit history or undo controls so you can revert a bad edit.

- Combine inpainting with prompt hints (e.g. “inpaint the upper right corner with forest background”) for greater control.

3.5 Upscaling & Resolution Enhancement

Resolution matters: whether for printing, large-format displays, or crisp digital media, OpenArt’s upscaling tools are essential.

Key Capabilities

- Resolution Increaser (Upscaler): available as a free tool, enhancing detail and clarity from lower-resolution images. (OpenArt)

- Modes: “Precise mode” (faithful fidelity) vs. “Creative mode” (adds artistic detail) (OpenArt)

- Support for masks, region scaling, and selective upscaling

- Integration of Topaz Labs or other high-end upscaling engines for video upscaling too (to 8K) (OpenArt)

Practical Use Cases

- Finalizing images for print (posters, large banners)

- Delivering client assets with ultra-high resolution

- Upscaling video exports to match distribution standards

- Preserving quality when cropping or zooming

Best Practices

- Always retain the original generation before upscaling, so you can compare or revert.

- Choose upscale level (2×, 4×, 8×) based on target output destination (web, print, billboard).

- Use creative upscaling when you want artistic texture enhancements; revert to precise for brand-critical consistency.

- For video, upscaling after motion interpolation often gives smoother results.
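Choosing between 2×, 4×, and 8× comes down to simple arithmetic once you know the target size. The sketch below assumes a print workflow and a 300 DPI target, which is a common rule of thumb rather than anything OpenArt specifies. The `choose_upscale` helper is hypothetical.

```python
import math

UPSCALE_FACTORS = (1, 2, 4, 8)  # 1 means the source is already large enough


def required_pixels(inches: float, dpi: int) -> int:
    """Pixels needed along one edge for a given print size and density."""
    return math.ceil(inches * dpi)


def choose_upscale(src_w: int, src_h: int, print_w_in: float, print_h_in: float, dpi: int = 300):
    """Return the smallest factor that meets the print size, or None if 8x is not enough."""
    need_w = required_pixels(print_w_in, dpi)
    need_h = required_pixels(print_h_in, dpi)
    for factor in UPSCALE_FACTORS:
        if src_w * factor >= need_w and src_h * factor >= need_h:
            return factor
    return None
```

For example, a 1024×1536 source printed at 12×18 inches needs 3600×5400 pixels, so `choose_upscale(1024, 1536, 12, 18)` returns 4. A 1024×1024 source for a 24×36 inch poster returns `None`, which signals that you should regenerate at a higher base resolution or accept a lower print density.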

3.6 Training Your Own Style / Custom Models

Perhaps the most powerful feature: the ability to train personal styles or custom models that reflect your brand, art vision, or character identity.

Feature Highlights

- With OpenArt AI, you can upload 4 to 128 example images to train style, character, face, or object models efficiently.

- OpenArt AI uses advanced optimization techniques to ensure high-quality performance even with fewer samples — making it ideal for data-efficient training.

- In OpenArt AI’s model training module, users can tweak architecture, parameters, and optimization settings for advanced creative workflows.

- OpenArt AI also lets you keep your trained model private or share it with the wider community marketplace.

- These custom models integrate seamlessly with OpenArt AI’s generation engine, allowing you to call your personalized styles directly in prompts.

Strategic Use Cases

- Build a brand visual signature: all outputs conform to a consistent style

- For character-based projects, your own character model yields stronger identity retention

- Create genre-specific models (e.g. a dystopian sci-fi aesthetic) and reuse across projects

- Client-specific models: deliver client projects where the style is bespoke to the client

- Prototyping: train a quick model to test creative direction before committing to full production

Tips & Warnings

- Provide diverse reference angles, lighting, and environments in your training images to improve generalization.

- Monitor overfitting: if your model reproduces the training set closely but fails on general prompts, retrain with more variation.

- Use validation images outside the training set to gauge model robustness.

- Document metadata: model name, training set, settings, version — especially in team environments.

- Periodically retrain or fine-tune as your style evolves or output quality drifts.
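The validation and metadata tips above can be sketched in a few lines of Python. Everything here is an assumption made for illustration: the `ModelRecord` fields, the `split_holdout` helper, and the 20% holdout fraction are hypothetical and are not part of OpenArt's training module.

```python
import random
from dataclasses import dataclass, field


@dataclass
class ModelRecord:
    """Team-facing metadata for one trained model (hypothetical schema)."""
    name: str
    version: str
    training_images: list[str]
    settings: dict = field(default_factory=dict)


def split_holdout(images: list[str], holdout_fraction: float = 0.2, seed: int = 0):
    """Split image paths into (training, validation) lists, reproducibly."""
    if len(images) < 2:
        raise ValueError("need at least two images to hold one out")
    if not 0 < holdout_fraction < 1:
        raise ValueError("holdout_fraction must be between 0 and 1")
    shuffled = sorted(images)              # fixed starting order
    random.Random(seed).shuffle(shuffled)  # seeded, so the split is repeatable
    n_holdout = max(1, round(len(shuffled) * holdout_fraction))
    return shuffled[n_holdout:], shuffled[:n_holdout]
```

Train on the first list only, then prompt the finished model toward scenes resembling the held-out images to check whether it generalizes or merely memorizes.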

3.7 Prompt Flexibility & Optional Prompt Workflows

Unlike rigid prompt-only systems, OpenArt offers prompt-optional workflows and hybrid inputs, giving creators flexibility in how they interact with AI.

Highlights & Features

- You can skip the prompt entirely in some workflows, using sketch, reference image, or template.

- Hybrid input: combine text + sketch + reference image to guide the generation.

- Pre-configured workflows (e.g. sketch-to-image, mask fill, remix) where prompt input is optional, so there is no need to craft long prompts.

- Prompt templates library and parameter presets to accelerate prompt construction. (Powerusers AI)

- Support for multilingual prompts: enter prompts in any language the selected model supports. (OpenArt)

Benefits & Use Cases

- Lower barrier for non-technical creators or marketers who don’t want to craft prompts

- Faster iteration: you sketch rough forms and let AI flesh them out

- Mixed modalities allow more creative control: you supply shape + context and let AI fill style

- Useful for rapid prototyping: skip toward visuals faster

Best Practices

- Even when skipping the prompt, experiment with adding minimal prompt hints; small adjustments sometimes yield large improvements.

- For hybrid input, prioritize clarity: label which reference is shape and which is color-style or lighting.

- Use prompt templates as scaffolding, then modify key parameters to fine-tune.

- Save successful prompt + input combinations (“recipes”) for reuse.
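A "recipe" can be as simple as a JSON record stored next to the output. The sketch below shows one possible shape. The `Recipe` fields and helper names are hypothetical, and nothing here reads from or writes to OpenArt itself.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class Recipe:
    """One reusable prompt + input combination (hypothetical schema)."""
    name: str
    prompt: str                      # may be empty for prompt-optional workflows
    model: str
    reference_images: list[str] = field(default_factory=list)
    parameters: dict = field(default_factory=dict)


def recipe_to_json(recipe: Recipe) -> str:
    """Serialize a recipe to stable, human-readable JSON."""
    return json.dumps(asdict(recipe), indent=2, sort_keys=True)


def recipe_from_json(text: str) -> Recipe:
    """Rebuild a recipe from its JSON form."""
    return Recipe(**json.loads(text))
```

Sorting the keys keeps diffs small when recipes live in version control, which makes it easier to see which parameter changed between two attempts.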

3.8 Creative Variations, Remixing & Iterations

Once you’ve got a result you like, extending or remixing it is essential. OpenArt supports robust variation workflows.

Feature Abilities

- Creative Variations: branching off from a base image into alternate versions (pose, style, color, lighting). (SaaSworthy)

- Remixing / Re-generating: re-prompt around existing images or segments with modifications.

- Supply masked edits to one portion while keeping rest fixed.

- Save iteration history to compare branches.

- Combine variations with model blending to explore visual directions.

How We Use It

- From an initial hero image, spin off 5 alternate versions and compare for social media A/B testing

- Create seasonal or theme-based variants (e.g. same composition but winter vs summer)

- Use remixes to explore mood or color shift, then pick your favorite branch

- For client review, show variation sets to solicit feedback

Tips

- Limit branching depth (e.g. 2–3 levels) to avoid combinatorial explosion

- Use variation hints in prompt (e.g. “make cold lighting”, “shift to dusk”)

- When remixing, always provide context — include prompt + original image for coherent output

- Use the iteration history to backtrack when a variation drifted too far
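The combinatorial explosion is easy to quantify. If every image spawns the same number of variations at each level, the total is a geometric sum, as the small sketch below computes. The helper name is hypothetical.

```python
def total_variations(branches_per_image: int, depth: int) -> int:
    """Images produced when each image branches equally at every level, excluding the original."""
    return sum(branches_per_image ** level for level in range(1, depth + 1))
```

With 5 variations per image, `total_variations(5, 2)` is 30 and `total_variations(5, 3)` is 155. A fourth level would bring the total to 780, which is far more than anyone will review, so pruning to the best one or two branches at each level keeps the tree manageable.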

3.9 Team Collaboration & Business Features

OpenArt is not just for solo creators — it supports team workflows, billing, and permission control.

Business / Team Features

- Team Billing & Multi-Seat Access: invite members under a unified subscription. (OpenArt)

- Role-based access controls: admins, editors, viewers (depending on plan)

- Shared model libraries, shared prompt templates, shared style assets

- Generation quotas and budget controls per user

- Project-level structuring: separate spaces for different campaigns or clients

- Priority support and service-level features for higher plans. (SaaSworthy)

Use Cases

- Design agencies use OpenArt internally and across client projects

- Marketing teams coordinate visuals, share templates, and maintain brand assets

- Multiple stakeholders review variant images, give feedback, and iterate

- Controlled credit allocation per department (e.g. social, UX, ads)

Tips

- Establish a style library that the team must reference to maintain coherence

- Use versioning and naming conventions (project_2025_campaignX_v1, etc.)

- Monitor credit usage per seat to avoid overrun

- Regularly export or archive key assets to local storage for backup
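A naming convention is easiest to keep when a helper generates the names. The sketch below follows the `project_2025_campaignX_v1` pattern mentioned above. The function names are hypothetical, and the approach assumes the version suffix is always `_v` followed by digits.

```python
import re

# Matches any name that ends in "_v<number>".
_VERSIONED = re.compile(r"^(?P<stem>.+)_v(?P<version>\d+)$")


def asset_name(project: str, year: int, campaign: str, version: int = 1) -> str:
    """Build a name such as 'project_2025_campaignX_v1'."""
    return f"{project}_{year}_{campaign}_v{version}"


def next_version(name: str) -> str:
    """Return the same name with its version number incremented by one."""
    match = _VERSIONED.match(name)
    if match is None:
        raise ValueError(f"no version suffix in {name!r}")
    return f"{match['stem']}_v{int(match['version']) + 1}"
```

For instance, `next_version("project_2025_campaignX_v1")` gives `"project_2025_campaignX_v2"`, so exported files from successive review rounds sort predictably.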

3.10 Commercial Licensing, Rights & Use Cases

One of the major questions with AI-generated content is: Who owns what, and can I use it commercially?

Licensing & Rights

- OpenArt supports commercial use of generated images, subject to model-specific licensing terms. (tooljunction.io)

- Some models may have restrictions, so always check the model’s license or terms before using outputs in client deliverables.

- For custom-trained models, ownership of style/model usually belongs to you (user) if the training assets are your own or properly licensed.

- Attribution is typically optional, but you may choose to credit when using community-shared models.

Use Cases (Commercial)

- Marketing campaigns: banners, ads, visuals for social media

- Branding and identity: logos, style guides, signature visuals

- Editorial content: feature illustrations for articles, covers

- Merchandise & print: posters, T-shirts, brand collateral

- Animation & video: short brand narratives, intros, motion visuals

Tips to Mitigate Risk

- Retain all training assets and prompt logs in case of audit

- For client work, deliver both the raw file and prompt metadata for transparency

- Avoid using models trained on copyrighted materials without permission

- When licensing third-party models, maintain license records
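For audit purposes, it helps to tie each delivered file to the prompt, model, and license note that produced it. The sketch below fingerprints the file with SHA-256 from Python's standard `hashlib` module and emits one JSON line per asset. The record fields and the `audit_entry` helper are hypothetical, not an OpenArt export format.

```python
import hashlib
import json


def audit_entry(asset_bytes: bytes, prompt: str, model: str, license_note: str, created: str) -> str:
    """Return one JSON line linking an asset's fingerprint to how it was made."""
    record = {
        "sha256": hashlib.sha256(asset_bytes).hexdigest(),  # identifies the exact file
        "prompt": prompt,
        "model": model,
        "license_note": license_note,
        "created": created,  # supplied by the caller, e.g. an ISO 8601 date string
    }
    return json.dumps(record, sort_keys=True)
```

Appending these lines to a log file as assets are delivered gives you a simple record that can be checked later by re-hashing the file in question.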

4. Use Cases: How Top Features Enable Creative Workflows

Here we map how these features combine in practical workflows:

- Brand Identity Funnel: Train a custom style, generate hero visuals, branch variations for campaigns, animate short video ads, maintain consistent characters across seasons.

- Social Content Pipeline: Use prompt-optional workflows to sketch post layouts, generate multiple visuals fast, upscale for print, and remix for A/B testing.

- Novel / Comic Project: Start by training character models, build scene visuals, composite backgrounds, animate panel transitions, export assets.

- Client Deliverables: Set up team seats, enforce style guidelines, produce high-res visuals, export both static and short motion, maintain version tracking.

- Rapid Prototyping for Pitch: Use sketch input + prompt, generate visuals fast, iterate, present candidate options, pick direction, refine.

5. Best Practices & Pro Tips

- Start with strong references — better input yields stronger outputs.

- Iterate in small steps — generate, edit, remix; avoid trying to do everything in one shot.

- Document your prompts, models, and settings — you’ll want to reproduce or tweak later.

- Use hybrid inputs (sketch + prompt + reference) for more precise control.

- Monitor your credit usage — big video or high-res generation can burn credits.

- Batch generate variations for A/B testing or creative exploration.

- Use post-processing in external tools (e.g. Photoshop) for final polish, if needed.

- Maintain backups of original generations, training sets, and version history.

- Test consistency by embedding your character into new environments periodically.

- Stay current with new model releases — OpenArt regularly updates and adds new models. (OpenArt)

6. Limitations, Challenges & What to Watch

While OpenArt is powerful, no tool is flawless. Here are challenges and caveats:

- Visual artifacts: character limbs may misalign, or odd geometry may appear.

- Consistency drift: beyond certain complexity, character consistency may fail.

- Prompt ambiguity: vague prompts lead to unpredictable outputs.

- Model licensing restrictions: not all models permit commercial usage.

- Credit costs: high-res, long videos, and heavy usage can become costly.

- Overfitting on custom models: too narrow training data may reduce generalization.

- Latency / generation time: complex jobs (e.g. high-res video) may take time.

- Evolving UI / features: changes to interface or pricing may occur, so stay adaptable.

7. Conclusion: What the Top Features Mean for You in 2025

In 2025, the difference between generic AI art and strategic, brand-aligned visual storytelling lies in feature mastery. The top features of OpenArt AI (model diversity, consistent characters, video generation, editing tools, upscaling, custom model training, collaborative infrastructure, and prompt flexibility) collectively give creators, marketers, designers, and agencies the power to build visual ecosystems, not just images.

By mastering these features, you can:

- Maintain visual identity across media

- Iterate faster and more reliably

- Deliver high-resolution final assets

- Animate and engage audiences with motion

- Scale team-based production with governance

- Build brand assets that are unique and protected

If you integrate these features thoughtfully into your creative pipeline, your visual output becomes a competitive advantage.

8. Suggested Mermaid Diagram

flowchart LR

A["Prompt / Sketch / Reference Input"] --> B["Model Selection / Generation"]

B --> C["Base Output"]

C --> D["Editing Suite (Inpaint / Remove / Background / Expand)"]

D --> E["Variation / Remix"]

E --> F["Upscaling / High Res Output"]

F --> G["Video Module (Morph / Lip Sync / Transitions)"]

G --> H["Team / Library / Export"]

A --> I["Custom Model Training"]

I --> B

This diagram shows how inputs feed generation, flow through editing and variation, then upscaling or video, all within the unified platform. Training your own models loops back into generation.

9. References & Suggested Visuals

Suggested Visuals

- Architecture diagram of OpenArt modules

- Screenshot of model selection UI

- Before/after character consistency example

- Inpainting / background replacement illustration

- Video frame transition example

- Upscaling comparison (low res → high res)

- Workflow flowchart (mermaid above rendered as image)

Key Reference Sources

- OpenArt “What’s New” page, listing latest features (OpenArt)

- OpenArt features overview (inpainting, editing, etc.) (OpenArt)

- OpenArt Review & features analysis (mimicpc.com)

- OpenArt train-your-own-style feature page (OpenArt)

- OpenArt pricing / feature breakdown (SaaSworthy)

- Business / revenue growth insight (Sacra)

We hope this feature-by-feature, workflow-aware look at OpenArt AI gives you actionable insight rather than just background knowledge. Use it to build visual strategies, craft creative pipelines, and get the most out of OpenArt in 2025.